Increasingly, researchers and artists are tinkering with machine-learning software to explore how neural networks can express creativity, whether through generating contemporary paintings or paint swatch names. Designer Philipp Schmitt decided to program a computer to produce a photobook, a process that involved curation as well as creation. The result, he believes, teaches us how to see our surroundings from a new perspective — “through the eyes of an algorithm,” in his words.

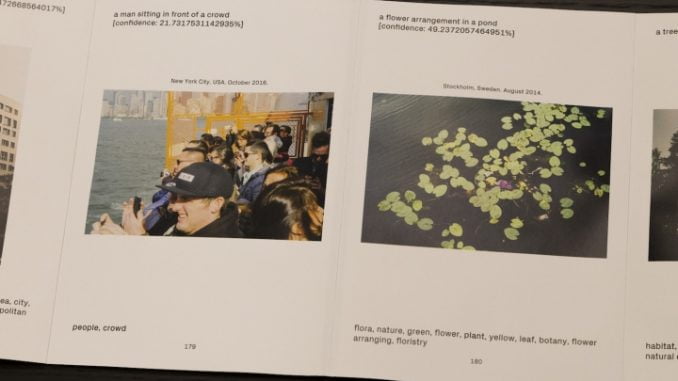

Drawn from Schmitt’s own archive of 207 photographs taken between 2013 and 2017, “Computed Curation” is a 95-foot-long accordion photobook that includes captions and tags. But you’ll quickly notice that these are often odd strings of companion texts. A photograph of the back of two men’s heads, for instance, is captioned, “a man with a frisbee”; its tags include “people, man, male, ceremony, wedding, groom.” Another picture shows a person whose face is covered with a long leaf, held up by the cameraman. Its caption: “person holding a wii remote in the hand”; among the tags: “surfing equipment and supplies,” “toy,” and “carving.”

These clusters can be amusing — and it doesn’t hurt that Schmitt’s images are often coyly humorous — but they also speak to how machines read and understand our human world. Schmitt’s photobook (which is handbound, as a nice, human touch) highlights the errors that often pop up in machine-learning and AI experiments; paradoxically, by doing so, it reveals the shortcomings in the human minds that produced these tools.

“Their flaws are often of technical nature, show their political/racial/cultural biases, or are just the result of [people] using them wrong,” Schmitt told Hyperallergic. For instance, the aforementioned image of a big leaf, captioned as a Wii controller, is evidence of how software from Silicon Valley is engineered with biases. As Trevor Paglen noted in an essay for The New Inquiry, neural networks tend to identify anyone holding an object as a person holding a burrito or a Wii remote — things the San Francisco Bay Area is known for. Schmitt also included a “confidence” number below each image caption, which represents, as a percentage, how certain Microsoft’s neural network was of the caption’s accuracy. Most of the percentages fall below 60%; the highest is ~92.78%, for “a man riding a wave on a surfboard in the ocean.”

“But I noticed that if you’re willing to consider the algorithm’s flaws as kind of ‘poetic computation,’ you can discover new meaning (intrinsic, or projected by me?), have fun, and see things in a new way,” Schmitt added. “That’s something I wanted to explore with the book.”

One of his favorite images is “a crowd of people watching a large umbrella,” which he described as “beautifully wrong.” It captures the crowd at one of the famous karaoke sessions that occur at Berlin’s Mauerpark, facing the stage, where a pink umbrella shades a performer. “a dog sitting on a sidewalk” presents another particularly telling image. In the aerial shot, a woman in Los Angeles is shown walking her child on a leash, a visual trope the computer understood as a human out with her dog.

Schmitt collected the captions and tags using computer vision services from Microsoft and Google, and used the histogram of oriented gradients to analyze the images’ composition. These components, along with color, were then considered by a learning algorithm, which sorted the images according to their similarities to yield a scattered map of photographs, where images with similar features were situated closer to one another. He then used another algorithm to connect the images in a path that runs through all of them while covering the least total distance, thereby setting the photobook’s page order. You can check out the process, recorded in his video below:

Computed Curation — Generator from Philipp Schmitt on Vimeo.
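
For readers curious about the page-ordering step, here is a minimal sketch of the general idea: given each photograph's position on a two-dimensional similarity map, find a short path that visits every image once. The article does not name the algorithm Schmitt used, so the greedy nearest-neighbor pass and the 2-opt refinement below are stand-in techniques chosen for illustration, and the coordinates and function names are hypothetical.

```python
import math

def path_length(points, order):
    """Total Euclidean length of the open path that visits points in this order."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:]))

def nearest_neighbor_order(points, start=0):
    """Greedy ordering: from the current image, step to the closest unvisited one."""
    unvisited = set(range(len(points)))
    unvisited.remove(start)
    order = [start]
    while unvisited:
        current = points[order[-1]]
        # Ties are broken by index so the result is deterministic.
        nxt = min(unvisited, key=lambda i: (math.dist(current, points[i]), i))
        unvisited.remove(nxt)
        order.append(nxt)
    return order

def two_opt(points, order):
    """Reverse segments of the path for as long as a reversal shortens it."""
    best = list(order)
    improved = True
    while improved:
        improved = False
        for i in range(len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if path_length(points, candidate) < path_length(points, best) - 1e-12:
                    best = candidate
                    improved = True
    return best

# Hypothetical map positions for six photographs (made-up coordinates,
# standing in for the output of the similarity-mapping step).
positions = [(0.0, 0.0), (5.0, 5.0), (0.5, 0.2), (5.2, 4.8), (2.5, 2.5), (0.1, 0.9)]

greedy_order = nearest_neighbor_order(positions)
page_order = two_opt(positions, greedy_order)
print("page order:", page_order)
print("path length:", round(path_length(positions, page_order), 3))
```

Both steps are heuristics: they produce a short path, not a provably shortest one. For a few hundred photographs that is usually good enough for neighboring pages to show images that sit near each other on the map.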

The matched images and words are entertaining enough to peruse, but it’s also interesting to consider the computer-curated package as a whole. As I progressed through the book, I was compelled to think about the logic behind the sequence: a beach follows rocks because the landscapes share the same browns and textures; a streetscape of Berlin is sandwiched between Ireland’s green Cliffs of Moher and a Croatian beach because the images all follow the rule of thirds, with a hazy strip topping each off. The accordion format of the book (which you can experience, in a limited sense, online) is a thoughtful layout to drive this exercise, as it allows you to examine the series as a continuous line of any number of images you’d like, rather than look at, say, two images facing each other on separate pages.

So while “Computed Curation” may seem like it cuts out the human editor, it actually exemplifies how creative tools can assist the human eye. Flipping through it, first of all, helps you become aware of how your own eyes have been trained to read images. And Schmitt believes that studying a computer-curated photobook can enhance an editor’s creative practice, as it may highlight patterns that they might otherwise have overlooked. Plus, he stressed, the program can never replace a real editor, as it could never anticipate how a sequence might provoke emotions in a human reader, which is why photobooks capture our attention in the first place.