Folks with more coding knowledge than I have (and a greater willingness to potentially brick a $2,400 machine) have been hooking up external graphics cards to Macs for years, but support now comes bundled into macOS 10.13.4 High Sierra. In layman’s terms, Apple officially supports some graphics cards that you’d normally only find in a bulky PC tower, so long as you have a separate external chassis to stick them in and a Mac with Thunderbolt 3.

I hoped eGPU support would be revolutionary. Beyond that, I hoped it’d allow me to break with PCs entirely, as I really only use them for gaming these days. Anyone who watches Apple Arcade knows I’ve been a little frustrated with the current state of Mac gaming, and an external graphics card struck me as an easy way to circumvent the limitations of Apple’s built-in graphics processors.

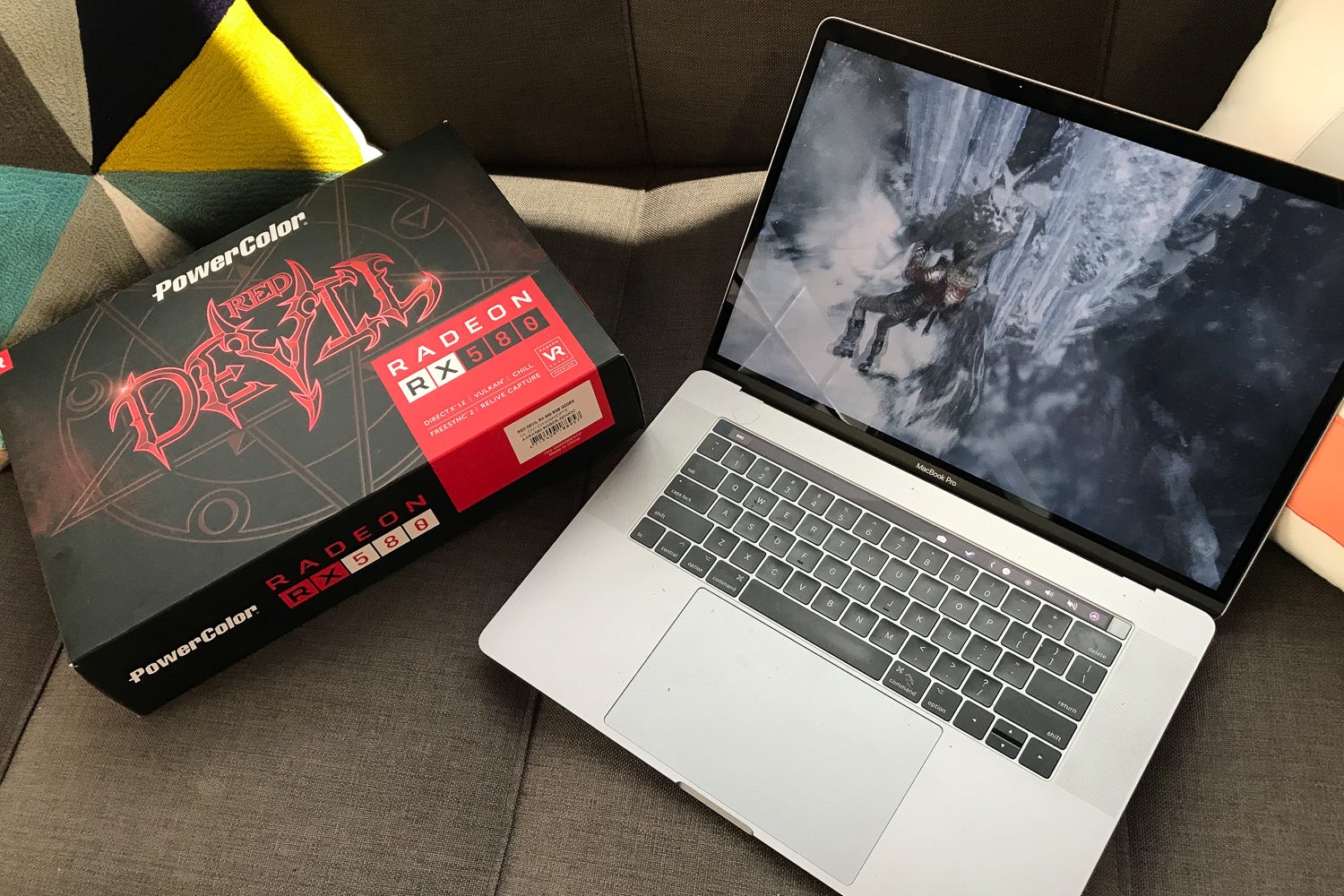

In some ways, it is. On the last show, I took an AMD Radeon RX 580 graphics card, slipped it into a spare eGPU chassis on loan from the folks at PCWorld, and watched in awe as the recently released port of Rise of the Tomb Raider suddenly looked the way it was supposed to on my 2017 15-inch MacBook Pro. Once I had everything on the table, setup took only about five minutes.

That’s the abridged version. Yes, it works. Though in practice, eGPU support is currently little more than an expensive novelty.

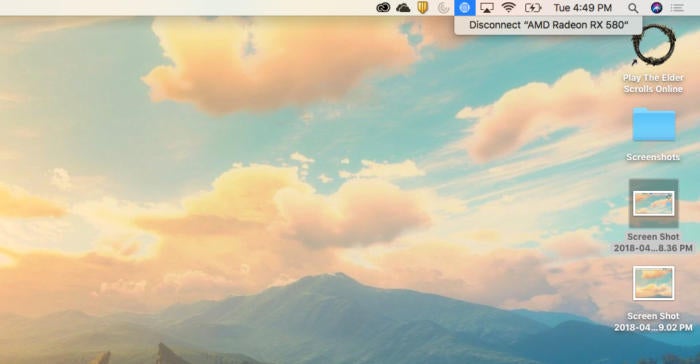

Stay within the lines

Let’s focus on the best part first. Once I slipped my Radeon RX 580 into an Akitio Node Pro chassis and tightened the screws, all I really needed to do was plug the Thunderbolt 3 cable into my MacBook Pro. Within seconds, an icon resembling a processor popped up on the Mac’s top menu bar, showing that the Radeon RX 580 was, in fact, working. (Getting it to work with games takes a few more steps, but more on that later.) Better still, I didn’t have to restart. Apple prides itself on elegant simplicity, and in this case Steve Jobs’ favorite old saying holds true: It just works.

It works, that is, so long as you have the right materials. You can only pull this off without technical trickery if you’re using a MacBook or iMac with Thunderbolt 3 support, which limits you to laptops from 2016 onward and iMacs from mid-2017 onward. That’s a bit of a bummer, but there’s a reason for it: Thunderbolt 3 supports data transfers of up to 40Gbps, twice the 20Gbps of Thunderbolt 2.

Unfortunately, that limitation likely knocks a lot of users out of the game right there. For those of you who can play with that kind of power, though, let’s move on to the supported cards. Here you’ll find your gaming ambitions further thwarted by Apple’s lack of direct support for Nvidia cards. It makes some sense considering that AMD makes most of the graphics cards found in contemporary Macs, but it’s another low blow in a gaming environment where Nvidia cards win mountains of accolades.

If you’re wondering, I tried using Nvidia cards, but there’s no built-in driver support. I plopped an Nvidia GeForce GTX 1060 into the eGPU chassis, connected it, booted, and nada. The little processor icon didn’t show up. It just didn’t work. I also tried Nvidia’s own macOS web driver, thinking I’d hit on a way to make it work. But nope. The Nvidia menu bar icon showed up, but the card itself never worked. Perhaps a different chassis would have helped.

In fact, Apple specifically outlines which chassis you’ll need for each card, so be sure to look over the associated support page. The Cupertino company is especially fond of the $449 650W Sonnet eGFX Breakaway Box, which works with every supported card, from the comparatively weak Radeon RX 470 to the blazingly fast Radeon Pro WX 9100. (You can also buy a 350W Sonnet box that’s bundled with a version of the RX 580 I used.)

There’s apparently some wiggle room with the chassis. Apple doesn’t officially recommend the Akitio Node Pro chassis I used, but it worked beautifully for our purposes. To be safe, though, I’d recommend sticking with Apple’s list.

Poetry in motion

All this effort feels kind of worth it once you see the results in motion. Hooked up to the Radeon RX 580 in its chassis, Rise of the Tomb Raider soared from the 24 frames per second it struggled to reach on the MacBook Pro’s built-in graphics to a far more satisfying 57 frames per second or more.
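To put that jump in perspective, here’s a quick back-of-the-envelope conversion of the two framerates into a speedup and a per-frame time. This is simple arithmetic on the figures above, not additional benchmark data:

```latex
\frac{57\ \text{fps}}{24\ \text{fps}} \approx 2.4\times
\qquad
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{fps}}:\quad
\frac{1000}{24} \approx 41.7\ \text{ms}
\;\longrightarrow\;
\frac{1000}{57} \approx 17.5\ \text{ms}
```

In other words, each frame takes well under half as long to draw with the eGPU attached.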

The jump wowed me in the benchmark tests we ran with RotTR’s built-in tool, but the difference was just as stark and unmissable in actual play. Granted, it wasn’t always perfect: I’d sometimes see brief freezes in the action, which I interpreted as the inevitable delay involved in getting signals from a remote GPU rather than one that’s jacked straight into the motherboard.

But watching Lara Croft jump from snowy ledges and sneak through desert passages felt natural and fluid with the better graphics card (and better framerates). And this was only with the Radeon RX 580, a $401 card we had on hand here. I’m almost certain I’d be blown away by the results on a $950 Radeon RX Vega 64, but we don’t currently have one.

Yet here’s another caveat. Shortly after this article went live, Feral Interactive contacted me to let me know it doesn’t support eGPUs in any of its games at the moment, although the studio is currently testing combinations of cards and GPUs and will eventually make an announcement regarding official support. I’ve asked for further clarification, but Feral’s statement presumably refers to optimized support, since the benchmark tests and general gameplay showed clear improvements when using the eGPU. The warning fascinates me. It suggests you may still have trouble with certain made-for-Mac applications even though Apple appears to have designed eGPU support so that official support shouldn’t matter.

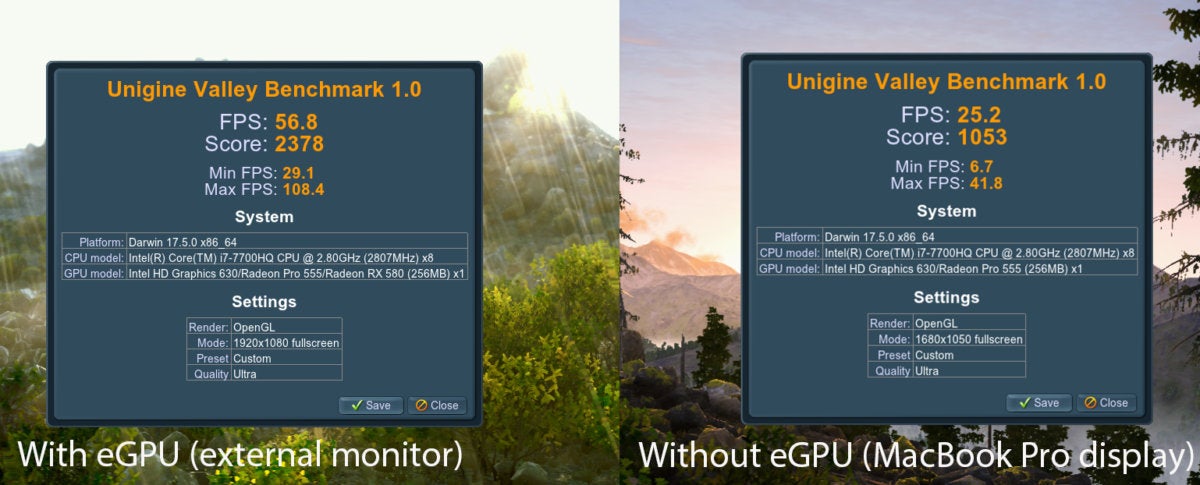

I ran benchmarks using both Unigine and Cinebench with the settings cranked to Ultra, and as you can see above, Unigine saw a massive improvement with the OpenGL API. The new card still wasn’t strong enough to push me past 60 frames per second, though, and the Cinebench results reveal why: In some respects, the Radeon RX 580 is only a tad more powerful than my built-in Radeon Pro 555.

Sometimes, in fact, benchmarks produced almost identical results in some tests while the RX 580 soared far ahead in others. When I tested Apple’s own Metal API with GFXBench, for instance, both versions of the T-Rex test produced framerates of 59 fps. With GFXBench’s Manhattan 3.1 test, though, the RX 580’s 60 fps soundly trounced the 555’s 33.8 fps.

In the games themselves, that extra oomph was more than enough to make a clear difference. I also tried our eGPU setup with World of Warcraft and Elder Scrolls Online and was happy to find myself safely pushing the graphics quality to heights I’d never been able to reach on my MacBook Pro’s discrete card. I’ve also seen reports of eGPUs causing crashes in some games, though I was fortunate never to see one myself. Despite these caveats, it occasionally made my MacBook Pro feel like a new machine.

The value of an eGPU

Again, though, it’s only kind of worth it. I’m not really convinced it’s worth around $700 to pick up the chassis and the card we used, but that assessment could change with better (and more expensive) equipment.

For that matter, the potential costs don’t end there. External GPUs usually don’t actually power the native displays unless a developer specifically allows it, meaning you can’t expect to jack one into your MacBook Pro and see the magic happen right there on the Retina screen. Instead, you’ll have to hook up an external display, so that’s another $160 or so you’re probably looking at. We got the best performance out of our eGPU when we closed the lid on my MacBook Pro, which means you’ll likely need another keyboard to interact with your game if you’re on a laptop. There goes another $50 or so. And since MacBooks typically don’t have a ton of storage space, you may even need a 1TB external hard drive just to hold the games. Conservatively, that’s another $55. And heck, if you don’t have a $60 gamepad, toss one of those in there as well.
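Tallying the rough prices quoted in this section shows how quickly the extras pile up. These are the ballpark estimates given here, not exact retail figures:

```latex
\underbrace{\$700}_{\text{chassis + RX 580}}
+ \underbrace{\$160}_{\text{display}}
+ \underbrace{\$50}_{\text{keyboard}}
+ \underbrace{\$55}_{\text{1TB drive}}
+ \underbrace{\$60}_{\text{gamepad}}
= \$1{,}025
```

That total assumes you need every accessory on the list; skip the ones you already own and it shrinks accordingly.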

So congratulations, yes, your MacBook can run games better now, but you’ve potentially spent around $1,000 to get it to that point. Not only that, but you’ve had to sacrifice your (ideally) Zen-like Mac setup for a desk where wires snake across the now-cluttered surface. If this is the point you want to arrive at, you’d probably just be better off slapping down the cash for an iMac Pro or at least a 5K iMac. (And for what it’s worth, Macworld staff writer Jason Cross reported that he was getting better than 60 fps when he ran Rise of the Tomb Raider on his machine without any eGPU magic.)

Who would do this to themselves?

I’ve spent all my time discussing eGPUs in the context of games. But that isn’t what Apple originally had in mind. Instead, eGPUs are a way for developers to harness more power across multiple displays with rapid refresh rates while using graphically demanding apps like Blender (which was clearly compatible with our eGPU). In addition, the extra power makes it easier to edit 360-degree virtual reality projects, as it’s possible to do the coding on the Mac proper and see the results with an HTC Vive on an external monitor. Apple even lets you hook up multiple eGPUs if you wish.

Weirdly, Final Cut Pro X apparently doesn’t use external cards to help with rendering, but there’s a clear advantage to using an eGPU for anyone working in 3D modeling. Beyond that, eGPUs offer a way to keep the powerful iMac Pro comparatively up to date once it’s past its prime, since its parts can’t be swapped out the way they can in a PC tower.

But what about folks who just want to play games? I could still see this being an attractive option for someone who does almost all of their work on a MacBook but still likes to play the occasional high-budget game. For most of the day, our hypothetical GPU enthusiast could tote their MacBook to the coffee shop or wherever, where it would handle almost everything they need with competence and style. But when they want to lose themselves in a graphically intensive game for a while, this setup lets them take that same laptop and briefly transform it into something far more powerful. I admit even I find the idea attractive on some level.

For that matter, for those of you who are still interested in virtual reality, it finally makes the HTC Vive a workable option on less expensive Mac products.

But for everyone else, it’s a hassle, and the feature sometimes feels as though it’s still in beta. You can’t use eGPUs in Windows through Boot Camp, for instance, so using an Nvidia card that way is still out of the question. (This feels like a particularly low blow.) Again, you have to hook up an external monitor to even see the effects of the new card. And even if you’re okay with all that, you’re still stuck with the same relatively small library of Mac games, many of which aren’t graphically demanding enough to warrant attaching an eGPU in the first place.

I’m hoping eGPU support is still a work in progress, and that Apple can smooth out the issues in future updates. (Judging by how Apple has been dragging its feet with what seem like relatively simple HomePod patches, I wouldn’t count on fixes coming soon. On the other hand, official eGPU support came out exactly when Apple said it would.) Right now, the feature is a strong foundation for what it could be, and during this week’s podcast we speculated that Apple’s experiments here may be related to the supposed modular upgrades in the upcoming new Mac Pro. Indeed, the more I think about it, the more I believe eGPU support would be perfect for a new Mac mini, but who knows when we’ll see one of those.

Provided you’re willing to deal with some limitations and some potentially high costs, eGPUs do enhance the Mac gaming experience. You may find, though, that working around those limitations isn’t worth the effort.

Source: Macworld