With 1.3 billion daily users, the Facebook site and its apps are the most-used pieces of software in the world. Only a handful of software companies have ascended to a similar echelon of ubiquity, including Microsoft, Google, and Apple. For better or worse, that is the world we now live in, where a large percentage our waking hours is spent interacting with software—and Facebook leads the pack, with the average user spending 50 minutes per day mostly watching videos and liking photos of babies. Television is the only leisure activity in the world that receives more attention than Facebook. And don’t forget that Facebook now owns Instagram and WhatsApp, too.

It is understandable, then, that Facebook cares a lot about the quality of its software. If Facebook pushes out a new version of its Android app with a crashing bug, millions of users could be affected. Those users might be inclined to switch to another social network, or even worse: put down their phone and interact with the real world. The net effect is the same, either way: Facebook’s share of your attention, and thus potential revenue, decreases.

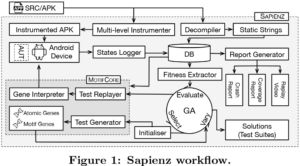

That’s why Facebook has some advanced bug-finding tools—including a devilishly clever dynamic analysis tool, initially devised by students at University College London and then acquired and further developed by Facebook’s London office. This is the first time they’ve shown the inner workings of this new tool, dubbed Sapienz, to the press.

Static vs. dynamic analysis

There are two ways of automatically analysing a piece of software in the hunt for bugs, security vulnerabilities, and other potential issues. Static analysis, as the name implies, is only interested in the source code of the program. Dynamic analysis is the opposite: you run the program, feed it a bunch of inputs, and record how it behaves.

Each technique serves a different purpose, and a big software company would usually use both. Static analysis is perfect for formally verifying that an algorithm works as intended, or for highlighting bad code that might allow for a buffer overflow or other security vulnerability. Dynamic analysis is better at finding the gnarly edge cases that cause crashes. Humans can manually perform both analyses, of course, but computers are obviously a lot quicker when it comes to testing millions of possible inputs.

Facebook’s static analyser is called Infer. The company open-sourced the tool in 2013, and a lot of big names (Uber, Spotify, Mozilla) use it. There isn’t a whole lot to say about it, other than it seems to be very popular and effective; download it today!

Sapienz

There are a lot of dynamic analysers out there, but none like Sapienz, according to Facebook. The biggest problem with dynamic testing is finding the right inputs that cause an app to crash. Facebook says that some dynamic analysers just throw random sequences of inputs at apps, with up to 15,000 input events between crashes. Sapienz, on the other hand, only needs about 100-150 events to find a crashing bug. In practice, that means Facebook finds significantly more crashing bugs in a shorter amount of time.

Sapienz has three main tricks up its sleeve. First, it uses a search-based evolutionary algorithm, rather than a random or model-based approach. Second, the fitness function that guides how the algorithm evolves is incredibly complex: there are multiple objectives, entwined by Pareto optimality, that must be fulfilled for each evolutionary change to be a success. And third, Facebook can run Sapienz on its One World test platform, which lets engineers find crashing bugs on hundreds of different Android devices simultaneously. (Sapienz only supports Android apps currently, though there are plans to expand to other platforms and app types.)

Let’s unpack those points in order, as they all play an important part in the efficacy of Sapienz. Most existing dynamic analysers either throw random inputs at an app, or use some kind of model: a set of test cases that are informed by outside knowledge, such as the app’s header or configuration files. Sapienz uses an evolutionary algorithm that, er, evolves the test inputs based on how the app actually responds to earlier inputs, with the hope of more efficiently finding crash cases.

The key to a successful evolutionary algorithm is its fitness function. I’m not your college computer science lecturer, so I won’t go into too much detail, but a fitness function essentially looks at the result of a test case, and decides how close that result is to a desired outcome/objective. The results that don’t fulfil the fitness function are tied up in a burlap sack and thrown in the river; the good ones are bred together, to form the basis of the next round of testing.

According to Facebook’s engineers, most of their secret sauce is in Sapienz’s fitness function, which has three objectives: to test as many of the app’s methods and statements as possible, find as many crashes as possible, and minimise the length of the test sequences required to cause crashes. The first two are all about producing better, crash-free software; the third is to improve the efficiency of the system, so that a decent number of crashes can be found in a reasonable amount of time.

These three objectives are assessed by the fitness function for Pareto efficiency. That is, one objective isn’t more important than the others: if the evolutionary algorithm is only producing long test sequences, but they’re providing good coverage and finding lots of crashes, then those long tests will be kept alive. Over time the system tries to hit Pareto optimality: where it’s impossible to improve one objective without negatively impacting another. So, in this case, the algorithm would attempt to reduce the test sequence length without reducing coverage or the fault revelation.

[Source”indianexpress”]